Adding a Log Type

A Log Type is a clear definition of the format in which an application writes logs. Site24x7 supports more than 100 log types by default. To get started, enable AppLogs, create a Log Profile, associate a Log Type with it, and start collecting, analyzing, and managing your logs. You can also create custom log types in addition to those Site24x7 supports by default.

Adding a custom log type


If your log type isn't in the list of supported log types, you can create and define a custom log type.

Go to Admin > AppLogs > Log Types > Add Log Type.

- Display Name: Enter a display name.

- Maximum Upload Limit: The maximum amount of logs (for this log type) that you can upload during the current billing cycle. This field is visible only to users who have purchased Log Management add-ons.

- Search Retention (days): You can choose from the predefined Search Retention (days) options, 7, 15, 30, 60, or 90, to retain your logs. This setting specifies the number of days the collected log data will be stored and available for search in Site24x7. The default value is 30 days. Know more.

- Auto Discovery: Toggle Enable to automatically look for this log format across any new servers that have been associated with this log type and start to upload them.

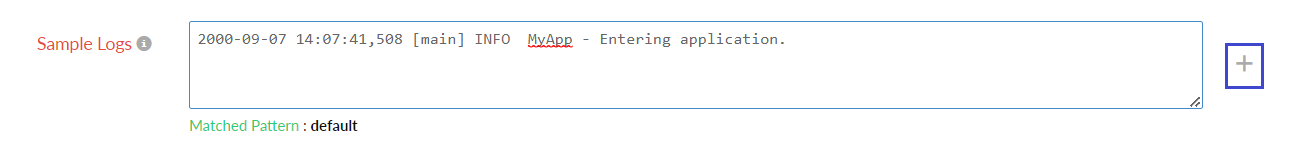

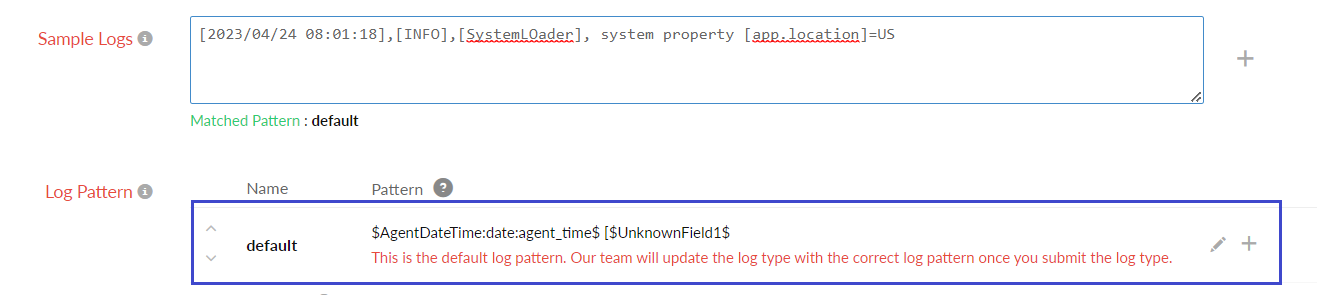

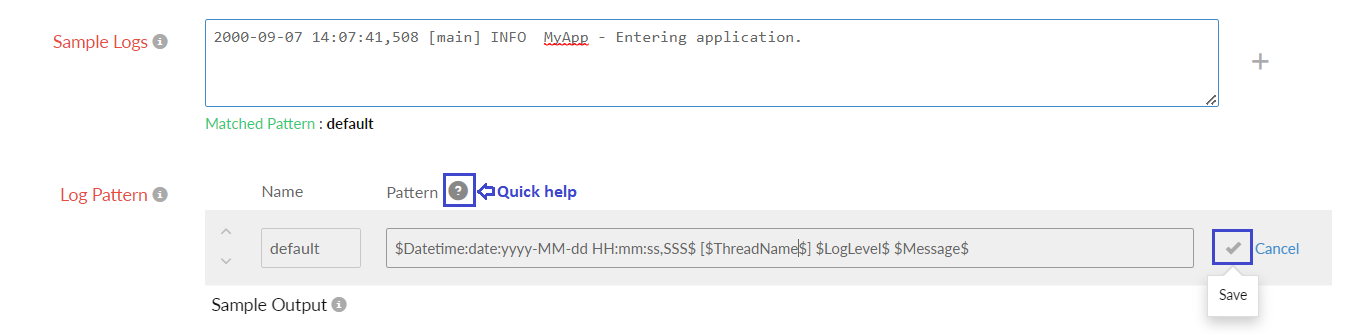

- Sample Logs: Provide sample log lines to discover the log pattern. Click the + icon to add multiple logs. AppLogs lets you define multiple log patterns so that different log formats can be combined in a single log type. AppLogs also supports multiline, JSON, key-value, and XML format logs.

- Log Pattern: Log Pattern is the format in which Site24x7 parses your logs. This can be customized as per your requirements. You can also submit the log type with a default generated pattern. Our team will verify and update the log type with the correct log pattern once you submit the log type.

Note: For some sample logs, log patterns are identified and generated by default. In such a case, replace the default name with a relevant field name. Refer to the Define a log pattern section to create a custom log pattern.

When you submit a log type with a default pattern using AgentDateTime, a message will appear after saving: if no valid date and time value is available in the log line, AgentDateTime is used as the date format. This means the log line will display the time the log was read by our agent.

Whenever a log type is created with the default AgentDateTime, our team will verify if it is valid. If it is invalid, the log pattern will be updated with the correct date-time format.

If you have a valid date and time format in your logs, refer to the Defining a Date Field section to configure the correct date field.

Once you define the Pattern and enter the Name, click the icon to save the pattern. You can also click the quick help icon for log pattern syntax help.

If you still need help creating the log pattern, you can contact support@site24x7.com with sample log lines you want to configure.

Note: Refer to the Update field configurations, Filter log lines at source, and Derived field support sections in this document to know the available log field configurations and features.

- Enable API Upload if you want to send logs via an HTTPS endpoint.

- Finally, click Save and associate it to a Log Profile. You can start searching your logs.

Define a log pattern

Every field name should start and end with $ (for example, $Message$). A custom log pattern can be given in the Log Pattern section with the following syntax:

Log Pattern

$FieldName:DataType:Format$

| Attribute | Description |
|---|---|
| Field Name | Provides a description for an attribute |
| Data Type | This is the type of data associated with a FieldName. A FieldName can be one of the following DataTypes: Number, String, Date, Decimal, IP, Word, Config, Pattern, JSON object, or JSON array |
| Format | Needed only for the "Date" DataType. For the other data types, there is no need for a format |
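As a rough illustration of how the `$FieldName:DataType:Format$` syntax breaks down, the Python sketch below splits a simple pattern into its fields. The function name `parse_fields` is hypothetical and is not part of Site24x7. It only handles flat patterns and ignores nested Pattern, JSON, and Config fields.

```python
import re

# Matches anything between a pair of $ signs, e.g. $PID:number$.
FIELD_RE = re.compile(r"\$([^$]+)\$")

def parse_fields(pattern):
    """Return (name, data_type, format) tuples for a simple log pattern."""
    fields = []
    for body in FIELD_RE.findall(pattern):
        # Split at most twice so colons inside a date format are preserved.
        parts = body.split(":", 2)
        name = parts[0]
        # String is the default data type when none is given.
        data_type = parts[1].lower() if len(parts) > 1 else "string"
        fmt = parts[2] if len(parts) > 2 else None
        fields.append((name, data_type, fmt))
    return fields

for field in parse_fields("$PID:number$ $Role$ $DateTime:date:yyyy-MM-dd HH:mm:ss$ $Message$"):
    print(field)
```

Splitting on the first two colons only is a deliberate choice here, since date formats such as HH:mm:ss contain colons of their own.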

Defining a Number Field

($FieldName:Number$)

Here, Number is the Data Type of the value associated with the Field Name.

Defining a String Field

($FieldName$) or ($FieldName:String$)

Here, String is the text associated with the Field Name.

(Note: String is the default data type, and hence it need not be separately mentioned.)

Defining a Date Field

($FieldName:Date:Format$)

Here, FieldName is the variable name, Date is the Data Type of that variable. However, a Date variable must be defined with a Format.

For example, $DateTime:date:EEEE MMM dd HH:mm:ss.SSS yyyy$
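For readers who think in Python, the sketch below translates a handful of these date tokens into `strptime` directives and parses a timestamp with the result. The token map is a partial, assumed correspondence made for illustration. It is not how the Site24x7 agent parses dates, and it would misread formats that contain literal letters.

```python
import re
from datetime import datetime

# Partial token map, assumed for illustration only.
TOKENS = {
    "yyyy": "%Y", "yy": "%y", "MMMM": "%B", "MMM": "%b", "MM": "%m",
    "dd": "%d", "HH": "%H", "hh": "%I", "mm": "%M", "ss": "%S",
    "SSS": "%f", "EEEE": "%A", "EEE": "%a", "a": "%p", "Z": "%z",
}
# Longest tokens first so that "MMMM" is not read as "MMM" followed by "M".
TOKEN_RE = re.compile("|".join(sorted(TOKENS, key=len, reverse=True)))

def to_strptime(fmt):
    """Translate a log-pattern date format into a strptime format string."""
    return TOKEN_RE.sub(lambda m: TOKENS[m.group(0)], fmt)

def parse_date(value, fmt):
    """Parse a timestamp using a log-pattern date format."""
    return datetime.strptime(value, to_strptime(fmt))

print(to_strptime("yyyy-MM-dd HH:mm:ss"))
print(parse_date("2018-01-08 11:58:23", "yyyy-MM-dd HH:mm:ss"))
```

Note the case sensitivity: MM is the month and mm is the minute, so swapping them silently produces a different format.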

| Date field | Sample log | Log pattern |
|---|---|---|
| Tuesday Sep 19 13:34:56.123 2007 - The format should be EEEE MMM dd HH:mm:ss.SSS yyyy | Tuesday Sep 19 13:34:56.123 2007 Starting App Server | $Datetime:date:EEEE MMM dd HH:mm:ss.SSS yyyy$ $message$ |
| Sep 19 2007 13:34:56 123456 PST - The format should be MMM dd yyyy HH:mm:ss SSSSS z | Sep 19 2007 13:34:56:123456 PST Starting App Server | $Datetime:date:MMM dd yyyy HH:mm:ss:SSSSS z$ $message$ |
| 19-09-07 1:34:56 pm -0800 - The format should be yy-MM-dd hh:mm:ss a Z | 19-09-07 1:34:56 pm -0800 Starting App Server | $Datetime:date:yy-MM-dd hh:mm:ss a Z$ $message$ |
| 13:34:56,262 - The format should be HH:mm:ss,D | 13:34:56,262 Starting App Server | $Datetime:date:HH:mm:ss,D$ $message$ |
| Tue September 19 13:34:56 - The format should be EEE MMMM dd HH:mm:ss | Tue September 19 13:34:56 Starting App Server | $Datetime:date:EEE MMMM dd HH:mm:ss$ $message$ |
| For the Unix time (in seconds) 1190234095, the format should be $DateTime:date:unix$ | 1190234095 Starting App Server | $Datetime:date:unix$ $message$ |

Supported Date Formats:

| Format Requirement | Date Format | Example |
|---|---|---|
| Year - 2 digits | yy (or) y | 17 or 7 |
| Year - 4 digits | yyyy | 2017 |
| Month - 2 digits | MM | 07 |
| Month - 3 letters | MMM | Sep |
| Month Name in full | MMMM | September |
| Date | dd | 19 |
| Hours in a day (0-12) | hh | 1 |
| Hours in a day (0-23) | HH | 13 |
| Minutes in an hour | mm | 34 |
| Seconds in a minute | ss | 56 |
| Milliseconds in a second | SSS | 123 |
| Time Zone (+0800; -1100) | Z | -0800 |
| Time Zone (PST) | z | PST |
| Time Zone (-08:00) | X | +01:00 |
| Day in a year | D | 262 |
| Day Name - 3 letters | EEE | Tue |
| Day Name in full | EEEE | Tuesday |
| AM/PM/am/pm | a | pm |
| Unix time - seconds since epoch | unix | 1190234095 |
| Unix time - milliseconds since epoch | unixm | 1190234095123 |
| Unix time - microseconds since epoch | unixu | 1585597114.768750 |

Fetching date value from a folder or a file name

Note: The folder name generally consists of year, month, and date fields only. We will fetch the date value only if the log lines consist of hour, minute, and second values at the end.

$DateTime:date:@folder(yyyy-MM-dd)HH:mm:ss$

$DateTime:date:@file(yyyy-MM-dd)HH:mm:ss$

$DateTime:date:@filepath(yyyy-MM-dd)HH:mm:ss$

For example,

Sample Log

11:10:11 CassandraDaemon:init Logging initialized

11:10:12 YamlConfigurationLoader:load Loading settings from file

11:10:13 DatabaseDescriptor:data Data files directories

Log Pattern

$DateTime:date:@folder(yyyy-MM-dd)HH:mm:ss$ $ClassName$:$Method$ $Message$

File Name: D:\MyWebApp\2020-01-15\process.log

Here, the date value is present in the parent folder of the log file; hence @folder is mentioned in the log pattern.
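The idea behind @folder can be sketched in Python as follows: the date comes from the parent folder name and the time comes from the log line. The helper name `timestamp_from_folder` is hypothetical and only mirrors the example above. It is not the agent's implementation.

```python
from datetime import datetime
from pathlib import PureWindowsPath

def timestamp_from_folder(file_path, time_text):
    """Join the date in the parent folder name with the time in the log line."""
    folder = PureWindowsPath(file_path).parent.name  # "2020-01-15" in this example
    return datetime.strptime(f"{folder} {time_text}", "%Y-%m-%d %H:%M:%S")

line = "11:10:11 CassandraDaemon:init Logging initialized"
time_text = line.split(" ", 1)[0]  # leading HH:mm:ss value of the log line
stamp = timestamp_from_folder(r"D:\MyWebApp\2020-01-15\process.log", time_text)
print(stamp)
```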

Collecting logs without the date value in the log line

At times, log lines will have only the time field and not the date value. In such cases, configure the date pattern below to collect logs.

Sample Log

11:10:11 CassandraDaemon:init Logging initialized

11:10:12 YamlConfigurationLoader:load Loading settings from file

11:10:13 DatabaseDescriptor:data Data files directories

Log Pattern

$DateTime:date:@filedate(yyyy-MM-dd)HH:mm:ss$ $ClassName$:$Method$ $Message$

Here, @filedate will take the date value from the file's last modified date.
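A minimal Python sketch of the same idea: read the file's last modified time, keep only its date, and attach the time taken from the log line. The helper name `timestamp_from_mtime` is hypothetical, and reading the modified time as UTC is an assumption made so the example is deterministic. The agent's actual time zone handling is not described here.

```python
import os
import tempfile
from datetime import datetime, timezone

def timestamp_from_mtime(file_path, time_text):
    """Join the file's last-modified date with the time found in the log line."""
    # Reading the modified time as UTC is an assumption made for this sketch.
    modified = datetime.fromtimestamp(os.path.getmtime(file_path), tz=timezone.utc)
    clock = datetime.strptime(time_text, "%H:%M:%S").time()
    return datetime.combine(modified.date(), clock)

# Demo: create a throwaway file and pin its modified time to 2020-01-15 (UTC).
with tempfile.NamedTemporaryFile(suffix=".log", delete=False) as handle:
    demo_path = handle.name
pinned = datetime(2020, 1, 15, 12, 0, tzinfo=timezone.utc).timestamp()
os.utime(demo_path, (pinned, pinned))
result = timestamp_from_mtime(demo_path, "11:10:11")
os.remove(demo_path)
print(result)
```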

Collecting logs without the date and time value in the log line

At times, log lines will have neither the date nor the time value. In such cases, configure the date pattern below to collect logs.

Sample Log

CassandraDaemon:init Logging initialized

YamlConfigurationLoader:load Loading settings from file

DatabaseDescriptor:data Data files directories

Log Pattern

$DateTime:date:agent_time$ $ClassName$:$Method$ $Message$

Here, agent_time will take the agent-installed machine's current time while reading the logs.

Parsing date value from a different language

For example, the log lines below contain a date value in Portuguese.

Sample Logs

Log Entry: 00:00:07 quinta-feira, 10 outubro 2019 Iniciando recebimento de mensagem

Log Entry: 00:00:07 quinta-feira, 10 outubro 2019 Buscando mensagems na fila Quantidade=0

Log Entry: 00:00:08 quinta-feira, 10 outubro 2019 Sucesso ao buscar quantidade: CM_OK

Log Pattern

Log Entry: $DateTime:date:pt(HH:mm:ss EEEE, dd MMMM yyyy)$ $Message$

Here, "pt" denotes the language code for Portuguese.

Refer to this document for locale codes for different languages.

Defining a Decimal Field

($FieldName:Decimal$)

Here, Decimal is the Data Type of the value associated with the Field Name. Ex: 165.5

Defining an IP Field

($FieldName:ip$)

Here, IP is the Data Type of the value associated with the Field Name. It can be either an IPv4 or IPv6 value.

Ex: 192.0.2.1, 2001:0db8:85a3:0:0:8a2e:0370:7334
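To check whether a sample value would qualify as an IPv4 or IPv6 address before declaring the field as ip, a quick test with Python's standard `ipaddress` module can help. This is only a local sanity check under that assumption. The function name `ip_version` is hypothetical, and the validation rules AppLogs applies may differ.

```python
import ipaddress

def ip_version(value):
    """Return 4 or 6 for a valid IP address, or None if the text is not an IP."""
    try:
        return ipaddress.ip_address(value).version
    except ValueError:
        return None

for candidate in ("192.0.2.1", "2001:0db8:85a3:0:0:8a2e:0370:7334", "not-an-ip"):
    print(candidate, ip_version(candidate))
```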

Defining a Word Field

($FieldName:word$)

Here, Word is the Data Type of the value associated with the Field Name. Word is simply a subset of String, but the field should contain only one word. If more than one word exists, it should be defined as String.

Defining a Config field

($FileName:config:@file$)

Here, @file is the config type associated with the Field Name.

Ex: @folder, @file, @ip, @host

($FieldName:config:@filepath$)

Ex: C:\Program Files\cassandra\logs\server.log

Here, if you mention the file path, Site24x7 AppLogs will take the complete path of the file and insert it into that field.

If you want to add only a specific folder (cassandra, for example), you can define the field as below:

$FieldName:config:@filepath:2$

Note: In the following example, the config field is defined at the end of the log pattern, and there is no space between $Message$ and $FileName:config:@file$. Configure the config field without leaving a space after the last field.

$Datetime:date:dd-MMM-yyyy hh:mm:ss:SSS a$ $Message$$FileName:config:@file$

To capture both folder and file names in separate fields, use two config fields in the pattern as in the example below:

$Datetime:date:dd-MMM-yyyy hh:mm:ss:SSS a$ $Message$$FileName:config:@file$$FolderName:config:@folder$

Defining a Pattern field

This data type is exclusive to JSON files and is used to define the pattern for any of the JSON object values in the same log.

Pattern 1:

json $log:pattern:$RemoteHost$ $RemoteLogName$ $RemoteUser$ [$DateTimefield:date:dd/MMM/yyyy:HH:mm:ss$] $Method$ $RequestURI$ $Protocol$ $Status:number$ $ResponseSize:number$ $Referer$ $UserAgent$$ $stream$ $time$

Here, the date field is inside the data type pattern field.

Pattern 2:

json $log:pattern:$RemoteHost$ $RemoteLogName$ $RemoteUser$ [$DateTimefield$] $Method$ $RequestURI$ $Protocol$ $Status:number$ $ResponseSize:number$ $Referer$ $UserAgent$$ $stream$ $time:date:yyyy-MM-dd'T'HH:mm:ss.SSS'Z'$

Here, the date field is outside the data type pattern field.

Sample log:

{"log":"172.21.163.159 - - [27/Jul/2020:19:53:11] GET /test.txt HTTP/1.1 200 12 - Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36","stream":"stdout","time":"2020-07-28T11:29:54.295671087Z"}

{"log":"172.21.163.159 - - [27/Jul/2020:19:53:11] GET /test.txt HTTP/1.1 200 12 - Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36","stream":"stdout","time":"2020-07-28T11:29:54.295671087Z"}

{"log":"172.21.163.159 - - [27/Jul/2020:19:53:11] GET /test.txt HTTP/1.1 200 12 - Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36","stream":"stdout","time":"2020-07-28T11:29:54.295671087Z"}
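The two-level parsing that a Pattern field describes can be sketched in Python: the outer JSON is decoded first, and the value of the log key is then split with a second pattern. The regular expression below is an assumed, approximate equivalent of the inner pattern from Pattern 1, and the user agent in the sample is shortened for readability. The function name `parse_entry` is hypothetical.

```python
import json
import re
from datetime import datetime

# Rough regex equivalent of the inner pattern applied to the "log" value.
LOG_RE = re.compile(
    r"(?P<RemoteHost>\S+) (?P<RemoteLogName>\S+) (?P<RemoteUser>\S+) "
    r"\[(?P<DateTimefield>[^\]]+)\] (?P<Method>\S+) (?P<RequestURI>\S+) "
    r"(?P<Protocol>\S+) (?P<Status>\d+) (?P<ResponseSize>\d+) "
    r"(?P<Referer>\S+) (?P<UserAgent>.*)"
)

def parse_entry(raw):
    """Decode the outer JSON, then split the inner access-log line."""
    outer = json.loads(raw)  # outer keys: log, stream, time
    fields = LOG_RE.match(outer["log"]).groupdict()
    fields["Status"] = int(fields["Status"])
    fields["ResponseSize"] = int(fields["ResponseSize"])
    fields["DateTimefield"] = datetime.strptime(
        fields["DateTimefield"], "%d/%b/%Y:%H:%M:%S")
    fields["stream"] = outer["stream"]
    fields["time"] = outer["time"]
    return fields

sample = ('{"log":"172.21.163.159 - - [27/Jul/2020:19:53:11] GET /test.txt '
          'HTTP/1.1 200 12 - Mozilla/5.0 (X11; Linux x86_64)",'
          '"stream":"stdout","time":"2020-07-28T11:29:54.295671087Z"}')
entry = parse_entry(sample)
print(entry["RemoteHost"], entry["Status"], entry["DateTimefield"])
```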

Defining a JSON object

($FieldName:json-object$)

This data type is exclusive to JSON logs.

json $widgetId:number$ $name$ $description$ $params:json-object$ $version$ $timestamp:date:yyyy-MM-dd HH:mm:ss$

Here, json-object is the Data Type of the value associated with the params field name.

Sample log:

{"timestamp":"2024-02-12 12:12:12","name":"Request Count of Sample App","widgetId":12,"description":"Event description","params":{"selected":212,"period":0},"version":"0.1"}
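To see what a nested object value looks like once the sample line is decoded, the short Python sketch below loads the same log and reads the params key. It only illustrates the shape of the data. How AppLogs stores or indexes a json-object field is not shown here.

```python
import json

sample = ('{"timestamp":"2024-02-12 12:12:12","name":"Request Count of Sample App",'
          '"widgetId":12,"description":"Event description",'
          '"params":{"selected":212,"period":0},"version":"0.1"}')

record = json.loads(sample)
params = record["params"]  # a nested object, not a plain string
print(type(params).__name__, params["selected"], params["period"])
```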

Defining a JSON array

($FieldName:json-array:($FirstKey$ $SecondKey$ $ThirdKey$)$)

This data type is exclusive to JSON logs.

json $routePlannerRouteId$ $routeDate:date:yyyy-MM-dd'T'HH:mm:ss$ $routeId$ $regionId$ $oprCode$ $stopdetails:json-array:($locationKey$ $attributes$ $routePlannerStopId$ $stopETA$)$

Here, json-array is the Data Type of the value associated with the stopdetails field name.

Sample log:

{"routePlannerRouteId":"10010202","routeId":"10010202","routeDate":"2022-12-09T00:00:00","regionId":"548","oprCode":"initialETA","stopdetails":[{"attributes":"test1","locationKey":"53w4","routePlannerStopId":"257288","stopETA":"2022-12-10T15:27:09-05:00"},{"attributes":"test2","locationKey":"257288","routePlannerStopId":"257288","stopETA":"2022-12-09T13:26:58-05:00"},{"attributes":"test3","locationKey":"442433","routePlannerStopId":"442433","stopETA":"2022-12-10T15:27:09-05:00"}]}
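The keys listed inside json-array:( ... ) name the values to pick from each element of the array. As a hedged illustration, the Python sketch below does the same selection on a shortened copy of the sample log. The `KEYS` tuple mirrors the pattern above, and the resulting list of dictionaries is an assumption about a convenient shape, not the format AppLogs uses internally.

```python
import json

# Keys declared inside json-array:( ... ) in the log pattern, in order.
KEYS = ("locationKey", "attributes", "routePlannerStopId", "stopETA")

sample = ('{"routeId":"10010202","stopdetails":['
          '{"attributes":"test1","locationKey":"53w4",'
          '"routePlannerStopId":"257288","stopETA":"2022-12-10T15:27:09-05:00"},'
          '{"attributes":"test2","locationKey":"257288",'
          '"routePlannerStopId":"257288","stopETA":"2022-12-09T13:26:58-05:00"}]}')

record = json.loads(sample)
# Keep only the declared keys for every element of the array.
stops = [{key: item.get(key) for key in KEYS} for item in record["stopdetails"]]
for stop in stops:
    print(stop)
```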

Escape Special Characters

If the log line has special characters, use ESC() to escape them.

Sample log with special characters:

2022-01-12 22:00:29,793 GMT*16.2*Message

2022-01-12 22:00:29,793 GMT*16.2*Message

2022-01-12 22:00:29,793 GMT*16.2*Message

Then the log pattern should be defined as:

$Datetime:date:yyyy-MM-dd HH:mm:ss,S z$ESC(*)$Version$ESC(*)$Message$
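The effect of ESC() is comparable to escaping a literal character in a regular expression. The Python sketch below is an analogy only: the helper `esc` is hypothetical and simply wraps `re.escape`, and the regex is an assumed counterpart of the log pattern above, not the expression the agent generates.

```python
import re

def esc(text):
    """Treat the text literally, as ESC(...) does in a log pattern."""
    return re.escape(text)

# Assumed regex counterpart of $Datetime...$ESC(*)$Version$ESC(*)$Message$
LINE_RE = re.compile(
    r"(?P<Datetime>.+?)" + esc("*") + r"(?P<Version>.+?)" + esc("*") + r"(?P<Message>.*)"
)

fields = LINE_RE.match("2022-01-12 22:00:29,793 GMT*16.2*Message").groupdict()
print(fields)
```

Without the escape, * would be read as a regex quantifier rather than as the separator between the fields.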

Sample log patterns

The following log patterns are supported:

- Simple log pattern

- Log pattern with some default character

- Log pattern with custom date format

- Log pattern with field exclusion

Simple log pattern:

Note: In this pattern, all the fields are separated by a space.

Sample log:

3489 M 04 Mar 09:13:40.537 # WARNING: The TCP backlog setting of 511 cannot be enforced

Log pattern:

$PID:number$ $Role$ $DateTime:date$ $LogLevel$ $Message$

| Field Name | Field Value |
|---|---|
| PID | 3489 |
| Role | M |
| DateTime | 04 Mar 09:13:40.537 |
| LogLevel | # |
| Message | WARNING: The TCP backlog setting of 511 cannot be enforced |

Log pattern with some default character:

Note: Where a fixed character or word is repeated in all the log lines, it can be excluded by mentioning it in the log pattern. In the example below, characters such as [, ], *, and : are excluded.

Sample log:

2017/08/01 01:05:50 [error] 28148#1452: *154 FastCGI sent in stderr

Log pattern:

$DateTime:date$ [$LogLevel$] $ProcessId:number$#$ThreadId:number$: *$UniqueId:number$ $Message$

| Field Name | Field Value |
|---|---|
| DateTime | 2017/08/01 01:05:50 |
| LogLevel | error |
| ProcessId | 28148 |
| ThreadId | 1452 |
| UniqueId | 154 |
| Message | FastCGI sent in stderr |
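To make the role of the fixed characters concrete, here is an assumed regex counterpart of the pattern above, written in Python. The literal [, ], #, : and * appear in the expression but are not captured, so they never reach the field values. This is a sketch for local experimentation, not the parser Site24x7 uses.

```python
import re

# Literal characters sit between the named groups and are not captured.
LINE_RE = re.compile(
    r"(?P<DateTime>\S+ \S+) \[(?P<LogLevel>[^\]]+)\] "
    r"(?P<ProcessId>\d+)#(?P<ThreadId>\d+): \*(?P<UniqueId>\d+) (?P<Message>.*)"
)

line = "2017/08/01 01:05:50 [error] 28148#1452: *154 FastCGI sent in stderr"
parsed = LINE_RE.match(line).groupdict()
for name in ("ProcessId", "ThreadId", "UniqueId"):
    parsed[name] = int(parsed[name])  # these fields are declared as number
print(parsed)
```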

Log pattern with custom date format:

Note: If the sample log contains a different date format, specify the exact date format in the log pattern.

Sample log:

demo_user demo_db 192.168.22.10 58241 2018-01-08 11:58:23 AEDT FATAL: no pghba.conf entry for host

Log pattern:

$User$ $DB$ $RemoteIP$ $PID$ $DateTime:date:yyyy-MM-dd HH:mm:ss z$ $LogLevel$: $Message$

| Field Name | Field Value |
|---|---|
| User | demo_user |
| DB | demo_db |
| RemoteIP | 192.168.22.10 |
| PID | 58241 |
| DateTime | 2018-01-08 11:58:23 AEDT |
| LogLevel | FATAL |
| Message | no pghba.conf entry for host |

Log pattern with some field exclusion:

Note: There are some cases where not all log lines have the same number of fields. Some log lines may have five fields while others have four. In such a case, you must exclude the missing field using the '!' symbol in the log pattern. In the following example, "ProcessId" is missing in the second log line, so we have excluded that field in the log pattern.

Sample log:

Aug 7 07:35:02 log-host systemd[1]: Stopping CUPS Scheduler

Aug 7 08:40:02 log-host kernel: 817216.167300] audit: type=1400

Log pattern:

$DateTime:date$ $Host$ $Application$![$ProcessId$]!: $Message$

| Field Name | Field Value - Line 1 | Field Value - Line 2 |
|---|---|---|
| DateTime | Aug 7 07:35:02 | Aug 7 08:40:02 |
| Host | log-host | log-host |
| Application | systemd | kernel |
| ProcessId | 1 | - |
| Message | Stopping CUPS Scheduler | 817216.167300] audit: type=1400 |
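The part wrapped in '!' behaves much like an optional group in a regular expression. The Python sketch below uses an assumed regex counterpart of the pattern above to show that both sample lines match, with ProcessId left empty for the second one. It is an analogy for experimentation, not the agent's parser.

```python
import re

# The (?: ... )? group plays the role of ![$ProcessId$]! in the log pattern.
LINE_RE = re.compile(
    r"(?P<DateTime>\w{3} +\d+ [\d:]+) (?P<Host>\S+) "
    r"(?P<Application>[^\[:\s]+)(?:\[(?P<ProcessId>\d+)\])?: (?P<Message>.*)"
)

lines = [
    "Aug 7 07:35:02 log-host systemd[1]: Stopping CUPS Scheduler",
    "Aug 7 08:40:02 log-host kernel: 817216.167300] audit: type=1400",
]
parsed = [LINE_RE.match(line).groupdict() for line in lines]
for row in parsed:
    print(row)
```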

Sending logs via an API endpoint

Site24x7 allows you to send logs to AppLogs through an HTTPS endpoint.

Update field configurations

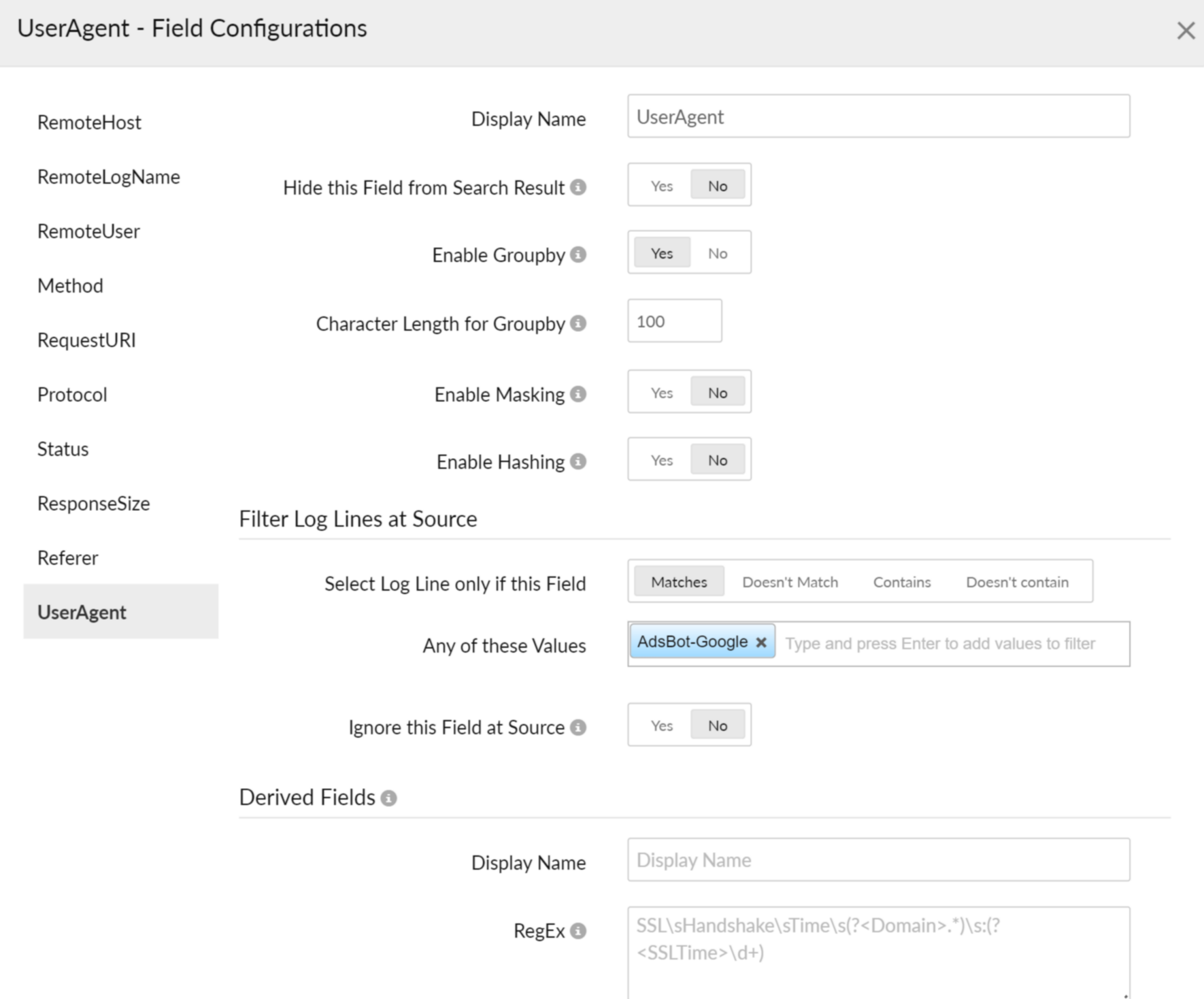

- In the Sample Output table, hover over a field name to find the icon and click on it.

- In the ThreadId - Field Configurations window that opens, choose the required field from the left pane and fill in the options on the right side.

- Display Name: The name will be automatically appended based on the field you choose from the left pane.

- Unit for this Field: Choose a suitable unit. This option is for number fields only.

- Toggle to Yes against the following options if you wish to enable them.

- Enable Groupby: Group the entries with the same value.

- Character Length for Groupby: Specify the number of characters that should be displayed in Groupby query output. You can add up to 200 characters for a field.

- Hide this Field from Search Result: Hide this particular field when you view the search results.

- Enable Masking: Toggle to Yes to enable masking. Provide the expression for the data to be masked as a capture group in the regex and the mask string.

- Enable Hashing: Toggle to Yes to enable hashing. Provide the expression and include the data to be hashed as a capture group in the regex. Learn more about masking and hashing.
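The capture-group convention used by masking and hashing can be tried out locally with the Python sketch below. The sample line, the field names in it, and the helper names are all hypothetical, and SHA-256 is an assumed hash algorithm chosen for illustration. The algorithm Site24x7 applies is not stated here.

```python
import hashlib
import re

def replace_group(text, regex, transform):
    """Replace capture group 1 of every match with transform(group 1)."""
    def repl(m):
        whole = m.group(0)
        start, end = m.start(1) - m.start(0), m.end(1) - m.start(0)
        return whole[:start] + transform(m.group(1)) + whole[end:]
    return re.sub(regex, repl, text)

def mask(text, regex, mask_string):
    """Masking: the captured data is replaced by a fixed mask string."""
    return replace_group(text, regex, lambda _value: mask_string)

def hash_value(text, regex):
    """Hashing: the captured data is replaced by its SHA-256 hex digest (assumed)."""
    return replace_group(
        text, regex, lambda value: hashlib.sha256(value.encode("utf-8")).hexdigest())

line = "payment accepted card=4111111111111111 user=alice"  # hypothetical log line
print(mask(line, r"card=(\d+)", "****"))
print(hash_value(line, r"user=(\w+)"))
```

Only the text inside the capture group changes. The rest of the match, such as card= or user=, stays in place.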

Filter log lines at source

- Select Log Lines only if this Field: Choose one of the options Matches, Doesn't Match, Contains, or Doesn't Contain to select the log lines whose field value satisfies the condition against the values entered in the Any of these Values field below.

Consider the examples below:

- If you don't want to collect INFO logs, select Doesn't Match and enter INFO in the Any of these Values field below.

- If you want to collect only the logs that contain the word system, select Contains, and enter system in Any of these Values field below.

Note: The values in the filters are case-sensitive.

- Any of these Values: Enter a value for the above-specified condition.

- Ignore this Field at Source: Toggle to Yes to ignore that particular field at the agent-side itself, before uploading.

- Click Apply.
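The four filter conditions can be summarized in a small Python sketch. Treating Matches as exact equality and Contains as a substring test is an assumption made for illustration, and the function name `keep_line` is hypothetical. The comparisons are case-sensitive, as the note above states.

```python
def keep_line(field_value, condition, values):
    """Return True if the log line should be collected (case-sensitive)."""
    if condition == "Matches":
        return field_value in values
    if condition == "Doesn't Match":
        return field_value not in values
    if condition == "Contains":
        return any(value in field_value for value in values)
    if condition == "Doesn't Contain":
        return not any(value in field_value for value in values)
    raise ValueError(f"unknown condition: {condition}")

# Skip INFO logs, keep everything else.
print(keep_line("INFO", "Doesn't Match", ["INFO"]))
print(keep_line("ERROR", "Doesn't Match", ["INFO"]))
# Keep only lines whose field contains the word system.
print(keep_line("system restarted", "Contains", ["system"]))
```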

Derived field support

Using derived field support, you can define a regex for the unparsed fields to extract necessary information.
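As a hedged illustration of the idea, the Python sketch below applies a regex to an unparsed Message value and pulls out one extra field. The message text, the regex, and the field name DurationMs are hypothetical examples. The regex syntax that AppLogs accepts for derived fields may differ from Python's.

```python
import re

# Hypothetical regex: pull a duration out of an unparsed Message field.
DERIVED_RE = re.compile(r"took (?P<DurationMs>\d+) ms")

def derive(message):
    """Return the derived fields found in the message, or an empty dict."""
    match = DERIVED_RE.search(message)
    return {"DurationMs": int(match.group("DurationMs"))} if match else {}

print(derive("GET /api/orders took 245 ms"))
print(derive("Starting App Server"))
```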

Log Tagging

Use log tagging to categorize and filter logs effectively. Apply predefined or custom log tag rules, search tagged logs, and create alerts for streamlined log management.